At the University of Luxembourg, researchers from the Interdisciplinary Centre for Security, Reliability and Trust (SnT) are working on making artificial intelligence understandable. This research area is known as explainable artificial intelligence (XAI).

Why make AI explainable?

Prof. Klein, a chief scientist in software engineering and mobile security, works within a research group at SnT that specialises in innovative approaches to trustworthy software. In one of his current projects, he and his colleagues are applying XAI to FinTech. They want to develop AI algorithms that follow XAI principles and support banking processes, so that bank experts can understand the decisions an AI makes.

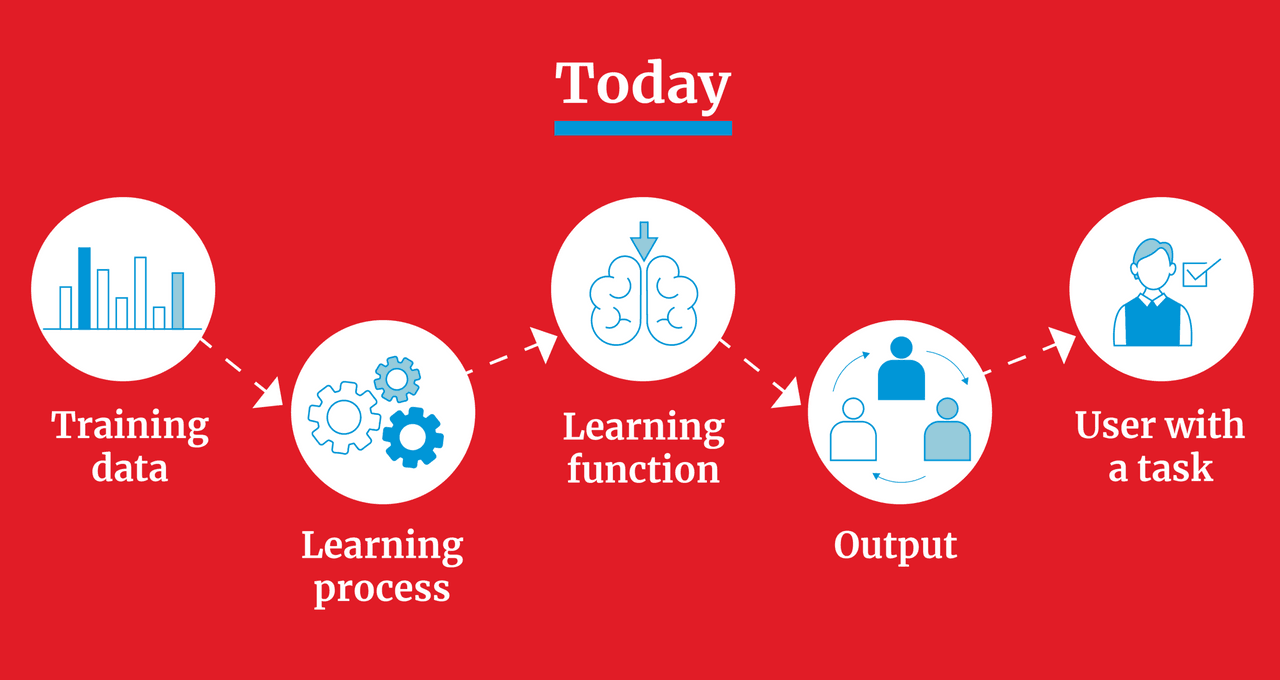

How AI operates nowadays (Illustration: Maison Moderne)

Prof. Klein’s research team is developing explainability for financial machine learning algorithms. This involves examining exactly how AI algorithms make decisions, with the goal that a human can understand how the software reached a given decision. Several factors are pushing XAI forward, including regulations such as the GDPR and the needs of auditors.
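To make the idea of a human-readable decision concrete, here is a minimal sketch assuming a simple linear credit-scoring model. The feature names (`income_k`, `debt_ratio`, `late_payments`), the weights, `BIAS` and `THRESHOLD` are all hypothetical; the article does not say which models the SnT team works with. Because the score is a weighted sum, each feature's contribution is just its weight times its value, which is the kind of breakdown a bank expert could inspect.

```python
# Minimal sketch: per-feature explanation of a linear scoring model.
# All feature names, weights, BIAS and THRESHOLD are hypothetical.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -0.5
THRESHOLD = 0.0


def explain(applicant):
    """Return (approved, score, contributions ranked by absolute size)."""
    # In a linear model, each feature adds exactly weight * value to the score.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score >= THRESHOLD, score, ranked


if __name__ == "__main__":
    approved, score, ranked = explain(
        {"income_k": 55, "debt_ratio": 0.4, "late_payments": 2}
    )
    print(f"approved={approved} score={score:.2f}")
    for name, value in ranked:
        print(f"  {name}: {value:+.2f}")
```

For this sample applicant the score comes out at about -1.1: the two late payments and the debt ratio outweigh the income contribution, so the application is declined, and the ranked list shows a reviewer exactly why.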

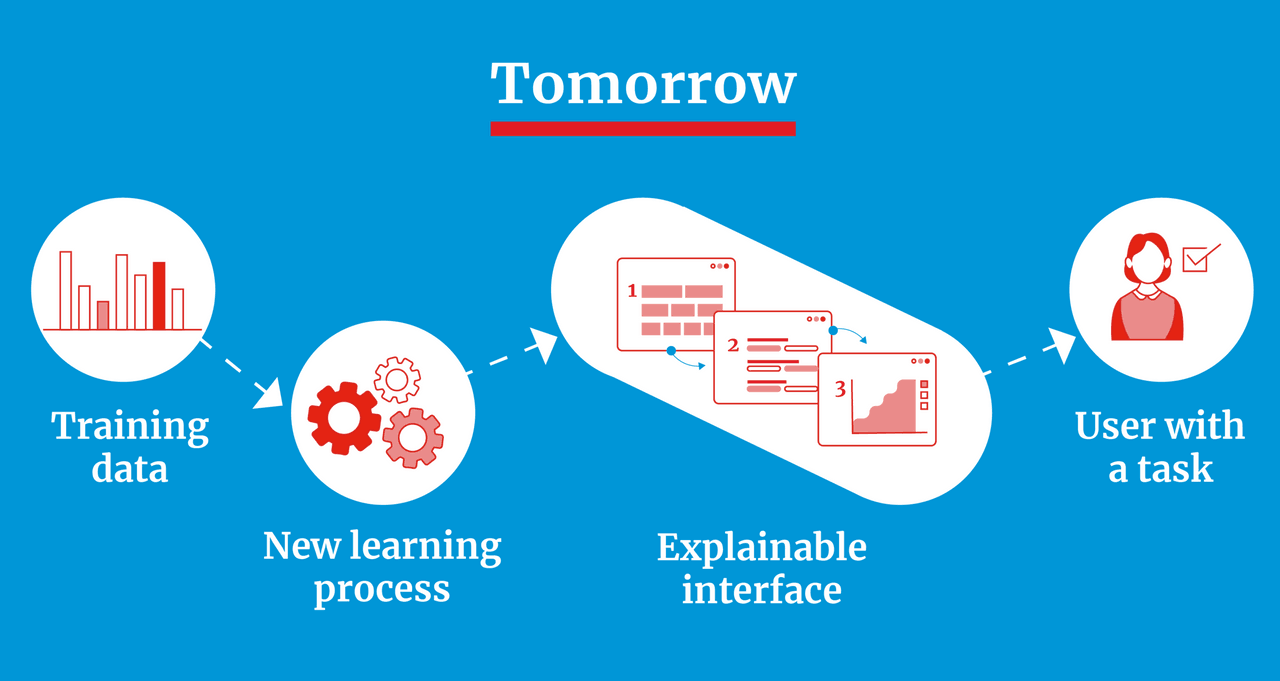

How explainable AI is game-changing (Illustration: Maison Moderne)

Beyond this, explainable AI has wide-ranging applications. It can even help refine a machine learning model: once you can see how the model performs and what drives its decisions, you can tweak it accordingly.
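One widely used way to see what a trained model relies on is permutation feature importance: shuffle one input column, re-measure accuracy, and treat the drop as that feature's importance. The sketch below implements it from scratch in plain Python as an illustration only; it is not presented as the SnT team's method, and `toy_model` and its data are hypothetical. (scikit-learn offers a maintained version as `sklearn.inspection.permutation_importance`.)

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=5, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical classifier that only ever looks at feature 0."""
    return int(row[0] > 0.5)


if __name__ == "__main__":
    data_rng = random.Random(42)
    rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
    labels = [toy_model(r) for r in rows]
    print(permutation_importance(toy_model, rows, labels))
```

Because the toy model ignores feature 1, shuffling it leaves accuracy unchanged (importance 0.0), while shuffling feature 0 costs roughly half the accuracy. If a real model turned out to lean heavily on a feature that should not matter, that signal is what would prompt the kind of tweaking described above.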

Features of a decision

It’s possible to view artificial intelligence as having two main subtypes: traditional AI, where the relevant features are typically chosen by people, and deep learning, where the algorithm itself decides which features are relevant for identifying something or someone. “Often, deep learning gives a better performance score in terms of accuracy and precision recall,” Prof. Klein noted.

However, there is a trade-off with this method: it provides better results but is less explainable.

The AI field is still working hard to make deep learning explainable. “Deep learning is very complex and employs several layers of computation,” he explained. “Few people really understand what is going on inside.” He added that a deep learning model often picks up a correlation that it then misinterprets or overemphasises.

Prof. Klein stated that more work is needed in this area. Researchers in the public and private sectors around the world, including big internet companies like Google, Facebook and Amazon, are working hard to extend the capabilities of AI. “This means that new solutions are coming every day,” he said. “What we cannot do today, maybe we will be able to do tomorrow.”